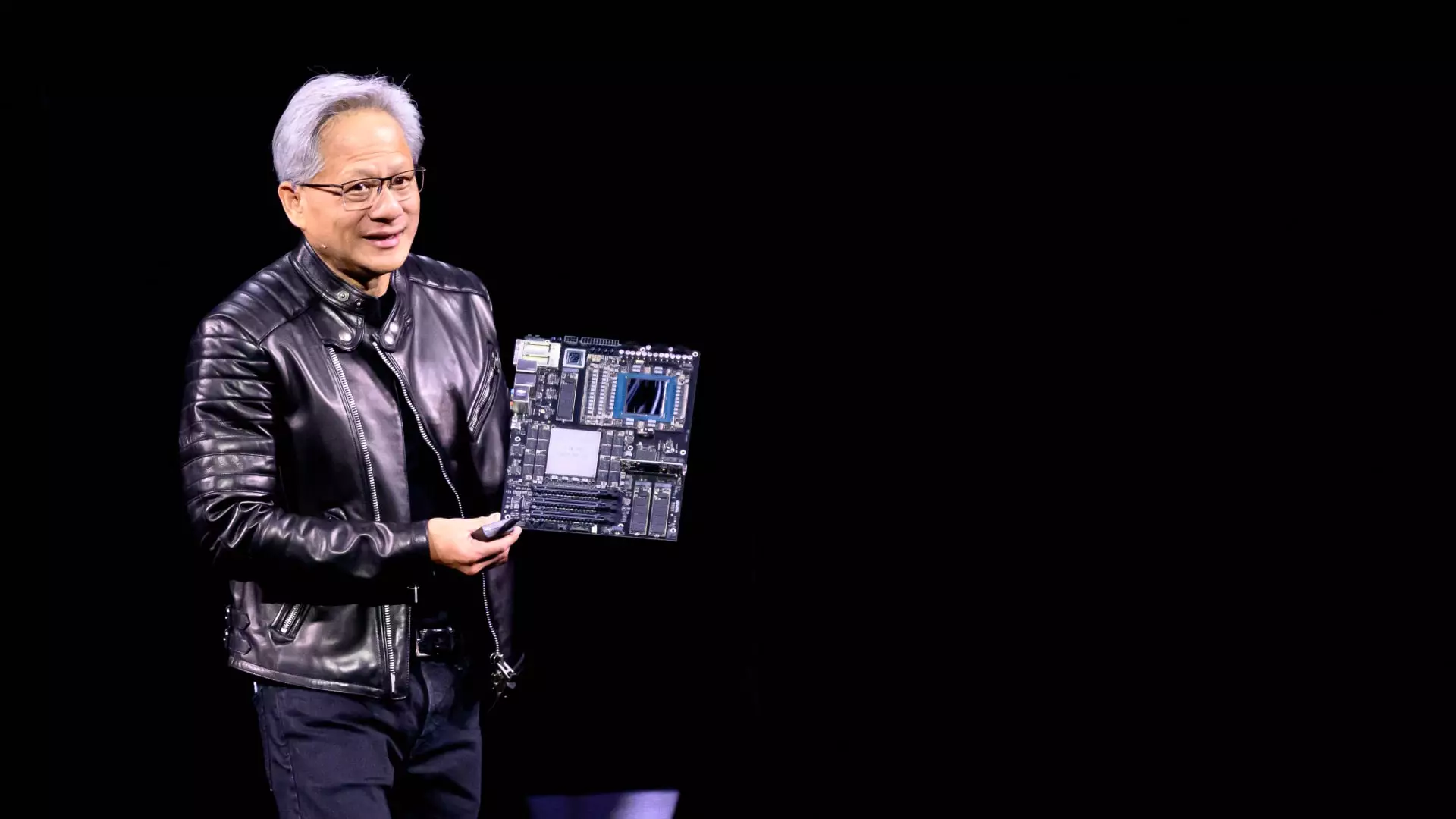

Nvidia’s recent GTC keynote, delivered by CEO Jensen Huang, had an unmistakable vibe: urgency. Huang urged companies to buy the fastest chips Nvidia makes, presenting them as the golden ticket for anyone diving into artificial intelligence (AI). His pitch suggested that faster chips would put to rest clients’ concerns about cost and return on investment, implying a straightforward correlation between speed and value.

However, while speed is undoubtedly vital in the tech realm, it’s important to question the sustainability of this narrative. The belief that faster chips automatically reduce costs neglects the other factors that drive AI development and deployment costs. Continuous investment in infrastructure does not guarantee the promised returns, especially in a marketplace as volatile and unpredictable as AI. The real challenge lies not just in speed but in how well companies can adapt their strategies around these advancements.

The Economic Reality of AI Chips

During the keynote, Huang performed “envelope math” on stage, bringing the economics of hyperscale AI infrastructure into the spotlight. It was an impressive display, but it raises an important question: does reducing complex financial models to bite-sized anecdotes gloss over the intricate economic challenges at play? The Blackwell Ultra systems, with claims of generating 50 times more revenue than their predecessors, the Hopper systems, hold an obvious allure, yet such projections are often laden with optimistic assumptions.

Investors are already on high alert regarding the spending habits of the major cloud providers. With Nvidia’s Blackwell GPUs reportedly priced at around $40,000 each, the potential return on investment is anything but guaranteed. Critics might argue that the enthusiasm surrounding these numbers blinds many to the real-world constraints companies face in adopting these technologies. With such steep costs, the expectation that cloud providers will continue to splurge on AI chips requires a suspension of disbelief.
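To see how sensitive that return is, the envelope math can be sketched in a few lines of Python. Only the roughly $40,000 price comes from the figures above; the hourly rental rate, utilization levels, and operating cost below are hypothetical placeholders chosen for illustration, not numbers from Nvidia or any cloud provider.

```python
# Back-of-envelope payback estimate for a single GPU.
# Only GPU_PRICE_USD reflects the reported figure; every other
# number is a hypothetical placeholder, not vendor or market data.

HOURS_PER_YEAR = 24 * 365
GPU_PRICE_USD = 40_000  # reported approximate price per Blackwell GPU


def payback_years(price_usd, revenue_per_hour_usd, utilization, opex_per_hour_usd):
    """Years until net income covers the purchase price (inf if it never does)."""
    # Revenue is earned only while the GPU is rented out;
    # operating cost is assumed to accrue every hour regardless.
    net_per_hour = revenue_per_hour_usd * utilization - opex_per_hour_usd
    if net_per_hour <= 0:
        return float("inf")
    return price_usd / (net_per_hour * HOURS_PER_YEAR)


if __name__ == "__main__":
    # Hypothetical rental rate ($2.00/h) and operating cost ($0.50/h).
    for utilization in (0.3, 0.5, 0.8):
        years = payback_years(GPU_PRICE_USD, 2.0, utilization, 0.5)
        print(f"utilization {utilization:.0%}: payback in {years:.1f} years")
```

Under these made-up inputs, the payback period swings widely with utilization alone, which is the kind of assumption a single headline multiplier tends to hide.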

The Cloud Providers’ Dilemma

There’s a notable tension between Nvidia and the four major cloud providers: Microsoft, Google, Amazon, and Oracle. As the race for AI supremacy heats up, these behemoths may hesitate to keep up their rapid capital expenditures given the steep financial commitments that Nvidia’s chips involve. Swelling anxiety about the sustainability of that spending raises critical questions: How long can these companies keep it up? What happens when the frenzy dies down and these massive investments fail to deliver the anticipated technologies or growth?

Huang’s dismissal of custom chips from these cloud providers seems somewhat presumptuous. While he downplays their potential, it’s hard to ignore that innovation often springs from unexpected places. Suggesting that custom ASICs won’t succeed just because they must “be better than the best” seems to overlook the nature of technological evolution, where flexibility and versatility might ultimately redefine market dynamics.

Future-Proofing the AI Infrastructure

Nvidia’s announcements about future AI systems, such as Rubin Next and Feynman chips, spark optimism about the road ahead. Huang’s assertions about projected budget approvals for hundreds of billions of dollars in AI infrastructure represent a tantalizing vision. But it’s prudent to remain skeptical about whether this expanded roadmap accurately reflects market needs or simply Nvidia’s aspirations.

The assumption that cloud providers will systematically integrate Nvidia’s latest offerings overlooks the complexities inherent in long-term tech planning. Building superior AI infrastructure involves numerous strategic elements: demand, user engagement, programming, and more. The question, then, is not just “What do you want for several hundred billion dollars?” but “What long-term strategies do cloud providers need to consider to make their investments sustainable?”

Final Thoughts on Nvidia’s Road Ahead

The fundamental premise that speed equals value in the AI sphere, while compelling, is fraught with uncertainties. Nvidia’s focus on speed may ultimately become a double-edged sword, one that could expose companies, particularly the cloud giants, to unforeseen risks and challenges. As tech evolves at an accelerated pace, it’s essential for stakeholders to maintain a broader perspective, seeking not just the fastest chips, but the right solutions tailored for sustainable growth and competitive longevity. The intersection between speed and reliability could hold the key to AI’s future, and it warrants careful consideration.